Developers using AI tools like ChatGPT often encounter tokens. This guide answers common questions about how lines of code turn into tokens and what that means for coding tasks.

What are tokens in the context of AI tools?

Tokens are the pieces of text that AI models like ChatGPT read and generate. A token can be as short as one character or as long as one word. Token counts matter because the context window, which is how much text the AI can handle at once, is measured in tokens.

How is the number of tokens calculated from lines of code?

The token count in a line of code depends on the programming language and its complexity. Different languages have different syntax and structure, leading to variations in token count. More verbose languages with longer keywords and more punctuation will generate more tokens per line.

Token Conversion for Code

As a rough average, 100 lines of code translate to about 1,000 tokens (around 10 tokens per line), depending on the language and code complexity.

Assuming the context window of ChatGPT is 4,096 tokens, you can fit approximately 400 lines of code within the context window.

This average is useful for estimating token usage. For a precise count, use an online tokenizer such as the OpenAI Tokenizer.
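The arithmetic above can be sketched in a few lines of Python. This is only a rule-of-thumb estimator built on the figures in this section (about 10 tokens per line and a 4,096-token context window); the function names are illustrative, and a real tokenizer will give different counts.

```python
# Rule-of-thumb figures from this guide; real counts depend on the tokenizer.
TOKENS_PER_LINE = 10      # about 1,000 tokens per 100 lines of code
CONTEXT_WINDOW = 4096     # context window size assumed in this guide


def estimate_tokens(num_lines: int, tokens_per_line: float = TOKENS_PER_LINE) -> int:
    """Return a rough token estimate for a number of lines of code."""
    return round(num_lines * tokens_per_line)


def max_lines_in_window(
    context_window: int = CONTEXT_WINDOW,
    tokens_per_line: float = TOKENS_PER_LINE,
) -> int:
    """Return roughly how many lines of code fit in the context window."""
    return int(context_window // tokens_per_line)


print(estimate_tokens(100))    # 1000
print(max_lines_in_window())   # 409
```

The result of 409 lines matches the "approximately 400 lines" figure above. In practice the prompt text and the model's reply also consume tokens, so the usable number is lower.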

Python

Python code is typically more concise due to its syntax and lack of semicolons and braces.

100 lines of Python code translate to about 1,000 tokens. This estimate can vary based on the specific nature of the code, such as the use of libraries and the complexity of functions.

JavaScript

JavaScript code generally has shorter lines that are less dense with syntax, so the token count per line is lower.

100 lines of JavaScript code equate to about 700 tokens. The use of various libraries and frameworks can influence this number.

SQL

SQL code is keyword-heavy, and table and column names are often long, so each line tends to be denser and produce more tokens.

100 lines of SQL code amount to about 1,150 tokens. The exact number can change based on how complex the queries are and how long the table and column names are.
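The per-language figures above can be collected into a small lookup for quick estimates. This is a minimal sketch that assumes the averages quoted in this guide; the dictionary and function names are hypothetical, and unlisted languages fall back to the general figure of 1,000 tokens per 100 lines.

```python
# Average tokens per 100 lines of code, as quoted in this guide.
TOKENS_PER_100_LINES = {
    "python": 1000,
    "javascript": 700,
    "sql": 1150,
}
DEFAULT_TOKENS_PER_100_LINES = 1000  # general average used for other languages


def estimate_tokens_for_language(num_lines: int, language: str) -> int:
    """Estimate tokens for num_lines of code in the given language."""
    per_100 = TOKENS_PER_100_LINES.get(language.lower(), DEFAULT_TOKENS_PER_100_LINES)
    return round(num_lines * per_100 / 100)


for lang in ("Python", "JavaScript", "SQL"):
    print(lang, estimate_tokens_for_language(250, lang))
# Python 2500
# JavaScript 1750
# SQL 2875
```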

Token Cost Calculation

The token costs for GPT-4o are as follows:

- Input: $5.00 per million tokens

- Output: $15.00 per million tokens

Sending 100 lines of code (about 1,000 tokens) to GPT-4o would cost approximately $0.005.

Generating 100 lines of code (about 1,000 tokens) from GPT-4o would cost approximately $0.015.
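The two figures above follow from multiplying the token count by the price per million tokens. A minimal sketch of that calculation, assuming the GPT-4o prices listed in this section and the estimate of about 1,000 tokens per 100 lines (the constant and function names are illustrative):

```python
# GPT-4o prices as listed in this guide, in US dollars per million tokens.
INPUT_PRICE_PER_MILLION = 5.00
OUTPUT_PRICE_PER_MILLION = 15.00


def token_cost(tokens: int, price_per_million: float) -> float:
    """Return the cost in dollars for a number of tokens at a given price."""
    return tokens * price_per_million / 1_000_000


tokens_for_100_lines = 1000  # rough estimate: about 10 tokens per line

input_cost = token_cost(tokens_for_100_lines, INPUT_PRICE_PER_MILLION)
output_cost = token_cost(tokens_for_100_lines, OUTPUT_PRICE_PER_MILLION)

print(f"Input:  ${input_cost:.3f}")   # Input:  $0.005
print(f"Output: ${output_cost:.3f}")  # Output: $0.015
```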

For more detailed token cost calculations, refer to ChatGPT Plus vs API: Cost Comparison.

Why is understanding token limits important?

Understanding token limits is crucial as they directly affect the context window and response limits of AI tools like ChatGPT.

The context window determines how much text the AI can consider at one time, influencing the accuracy and relevance of its responses. Exceeding token limits can lead to truncated outputs, where the AI might not process or generate the complete required information.

How can developers manage token limits effectively?

Managing token limits involves a few strategies:

- Breaking down large files into smaller pieces.

- Focusing on the relevant code and context for each task.
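The first strategy, breaking a large file into smaller pieces, could look something like the sketch below. It splits a list of source lines into chunks sized by the rough estimate of 10 tokens per line used in this guide. The function name and the fixed per-line estimate are assumptions for illustration; a real tool would count tokens with an actual tokenizer and would likely split on function or class boundaries instead of raw line counts.

```python
def split_into_chunks(lines, max_tokens, tokens_per_line=10):
    """Split lines into chunks whose estimated token count fits max_tokens.

    Uses a fixed tokens-per-line estimate, so the budget is approximate.
    """
    # Always allow at least one line per chunk so the loop makes progress.
    lines_per_chunk = max(1, int(max_tokens // tokens_per_line))
    return [
        lines[start:start + lines_per_chunk]
        for start in range(0, len(lines), lines_per_chunk)
    ]


# Example: a hypothetical 1,000-line file and a 4,096-token budget per chunk.
source_lines = [f"line {i}" for i in range(1000)]
chunks = split_into_chunks(source_lines, max_tokens=4096)

print(len(chunks))                # 3
print([len(c) for c in chunks])   # [409, 409, 182]
```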

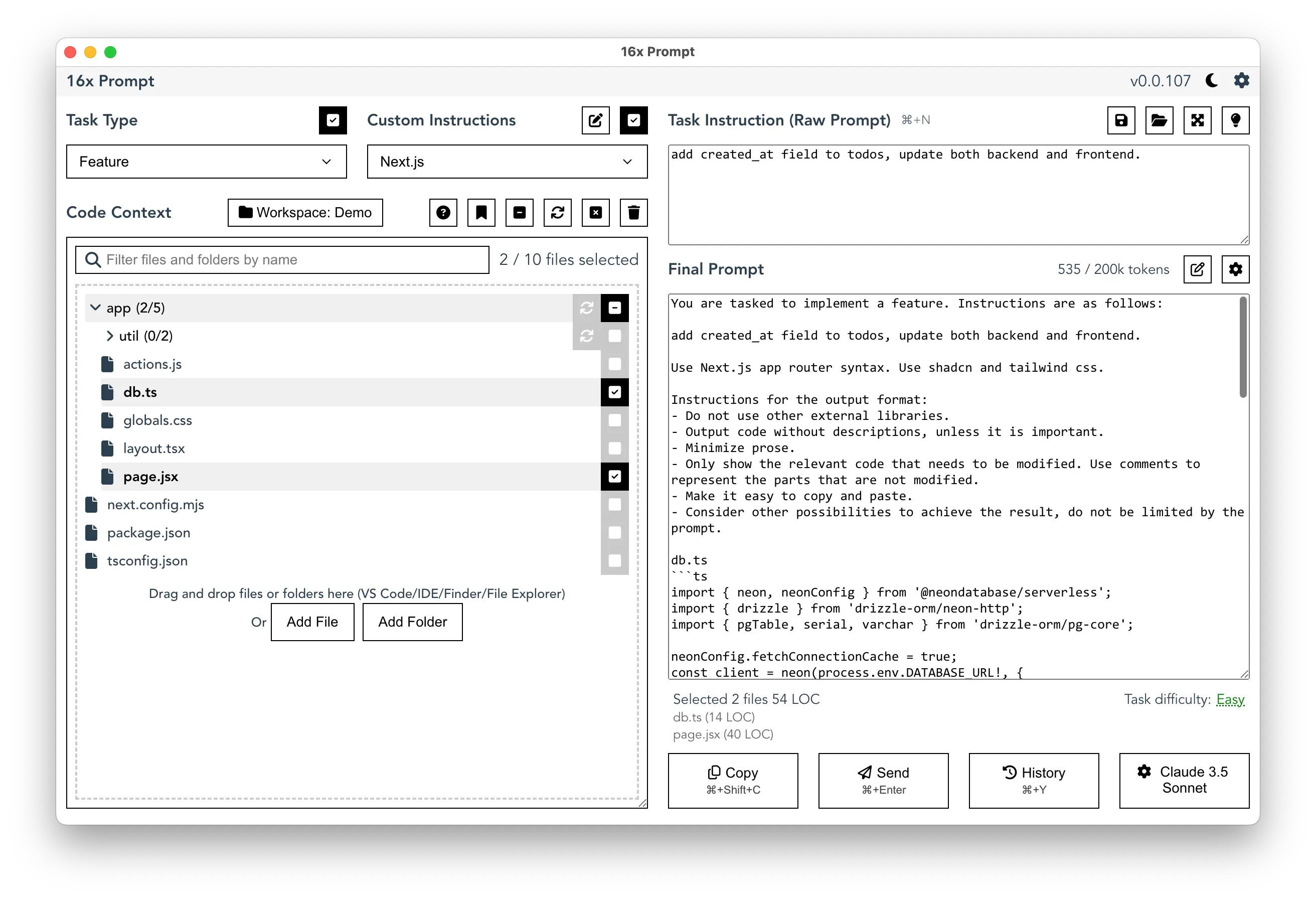

Tools like 16x Prompt can help keep track of token usage to avoid exceeding limits, as well as manage the source code context effectively.