- 16x Prompt vs ChatGPT / Claude

- 16x Prompt vs Cursor

- 16x Prompt vs aider

- 16x Prompt vs Cline / Roo Code

- 16x Prompt vs Repo Prompt

16x Prompt vs ChatGPT / Claude

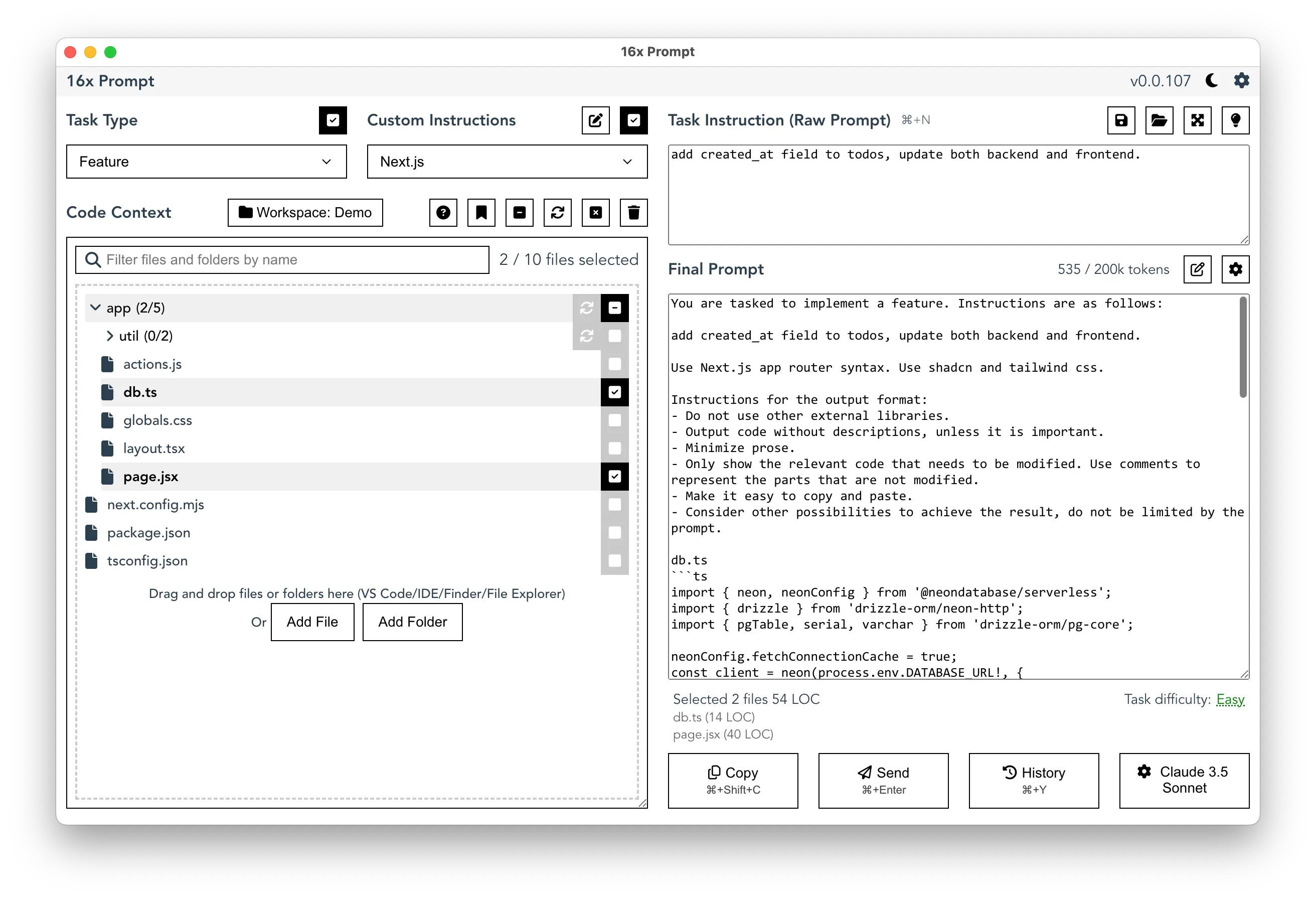

16x Prompt is designed to be used together with ChatGPT or Claude. Instead of writing the prompt directly, you "compose" it in 16x Prompt and then copy-paste it into the LLM or send it to the model via API.

This approach has several advantages:

Source code context

You can manage your source code files locally within the 16x Prompt app and choose which files to include in the prompt. The files are linked to your local file system and kept up to date automatically.

This eliminates the need to copy-paste code from your code editor or upload files for each prompt.
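As a rough illustration of the idea, the sketch below assembles a prompt from a task description and a list of local files. The function name `compose_prompt` and the `Task:` / `File:` layout are assumptions made for this example; they are not the actual prompt format used by 16x Prompt.

```python
from pathlib import Path


def compose_prompt(task: str, file_paths: list[str]) -> str:
    """Build one prompt string from a task description and local source files.

    Hypothetical helper: files are read at call time, so the prompt always
    reflects what is currently on disk.
    """
    sections = [f"Task:\n{task.strip()}"]
    for path in file_paths:
        source = Path(path).read_text(encoding="utf-8")
        # Label each file so the model can tell the sources apart.
        sections.append(f"File: {path}\n{source.rstrip()}")
    return "\n\n".join(sections)
```

Because the files are read each time the prompt is built, there is no separate copy of the code to keep in sync with the editor.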

Code Editing Features

16x Prompt now includes code editing features, allowing you to apply AI-generated code edits directly within the app. You can review changes, edit code inline, and apply modifications without leaving the workspace.

Formatting instructions

By default, ChatGPT doesn't come with formatting instructions, resulting in responses that are too long or too verbose.

If you turn on the Custom Instructions feature in ChatGPT, it might pollute your non-coding conversations and make ChatGPT output code for general questions.

16x Prompt embeds formatting instructions into the prompt, making the output compact and suitable for copy-pasting directly into your code editor.

You can also create different custom formatting instructions in 16x Prompt to fit your needs, for example, one for React and another for Python.
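A minimal sketch of per-stack formatting instructions might look like the following. The preset names and the instruction wording are invented for illustration and do not reflect the presets that ship with 16x Prompt.

```python
# Hypothetical presets: one set of formatting instructions per tech stack.
FORMATTING_PRESETS = {
    "react": "Return only the changed components as code blocks. Do not add explanations.",
    "python": "Return only the changed functions as code blocks. Do not add explanations.",
}


def with_formatting(prompt: str, preset: str) -> str:
    """Append the chosen formatting instructions to the end of a prompt."""
    instructions = FORMATTING_PRESETS[preset]
    return f"{prompt.rstrip()}\n\nFormatting instructions:\n{instructions}"
```

Since the instructions travel with each prompt rather than living in account-wide settings, they only affect the coding conversations they are attached to.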

16x Prompt vs Cursor

A similar comparison can be made with other AI editors such as Windsurf and PearAI.

Access to Premium Models

To use premium models like GPT-4.1 / Claude Sonnet 4 in Cursor, you need to either pay for API usage or use a paid plan.

16x Prompt lets you access these premium models at no extra cost by using your existing ChatGPT / Claude account and the monthly subscription you already pay for. Simply copy-paste the final prompt generated in 16x Prompt into ChatGPT or Claude to use these models.

16x Prompt also has built-in API integration for GPT-4.1 and Claude Sonnet 4, allowing you to use these models directly within the app by entering your own API key (BYO API). You can also compare the outputs of different models side by side.

Full Model Power

Cursor makes code changes directly in your code editor, which means it needs to generate code at the diff level so that the changes can be applied directly. This requires Cursor to add extra tooling on top of the model to generate the diffs. The extra tooling reduces the effectiveness of the underlying model, because the model has to take in an extra system prompt and tool definitions.

16x Prompt, on the other hand, is designed to generate code at the task level without explicitly generating diffs, so it can leverage the full power of the model. Code editing in 16x Prompt happens after the model has generated the code as the second step, so it does not interfere with the model's raw output when completing the task.
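The two-step idea can be sketched with Python's standard `difflib`: the model returns complete code for the task, and a diff is computed locally afterwards. This only illustrates the general approach; the function name `diff_after_generation` is hypothetical, and the source does not describe how 16x Prompt applies edits internally.

```python
import difflib


def diff_after_generation(original: str, generated: str, path: str) -> str:
    """Compute a unified diff locally from the model's full-file output.

    The model is never asked to emit a diff; the comparison happens as a
    second step, after generation has finished.
    """
    diff_lines = difflib.unified_diff(
        original.splitlines(keepends=True),
        generated.splitlines(keepends=True),
        fromfile=f"a/{path}",
        tofile=f"b/{path}",
    )
    return "".join(diff_lines)
```

With this split, the prompt sent to the model needs no diff syntax rules or tool definitions, which is the point the comparison above makes.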

Context Management

Cursor does not have a global context manager for choosing which files to include as relevant context, so you need to include files manually in each prompt. The automatic context detection feature in Cursor (RAG) is less reliable, because it can include too much or too little context.

16x Prompt has a global context manager to manage which files to include as relevant context. You can include files in the context manager and use them across tasks.
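One way to picture a global context manager is a single registry of selected files that every task reads from. The class and method names below are assumptions for illustration, not part of 16x Prompt.

```python
from dataclasses import dataclass, field


@dataclass
class ContextManager:
    """Hypothetical global registry of files selected as prompt context."""

    # Maps a file path to whether it is currently included.
    selected: dict[str, bool] = field(default_factory=dict)

    def add(self, path: str) -> None:
        """Register a file and include it by default."""
        self.selected[path] = True

    def set_included(self, path: str, included: bool) -> None:
        """Switch a registered file on or off without removing it."""
        if path not in self.selected:
            raise KeyError(f"{path} has not been added to the context")
        self.selected[path] = included

    def active_files(self) -> list[str]:
        """Return the included files, in the order they were added."""
        return [path for path, on in self.selected.items() if on]
```

The selection lives outside any single prompt, so a second task starts with the same files as the first unless you change the selection.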

Pricing and Cost

In terms of raw cost, 16x Prompt is cheaper than Cursor.

- Cursor charges monthly subscription fees plus usage-based costs, while 16x Prompt is a one-time purchase that lets you either use your existing ChatGPT / Claude subscription or bring your own API key.

- Cursor needs to generate code in diff format, which requires more API calls and tokens, increasing the cost.
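The reasoning behind the second point can be made concrete with simple arithmetic: fixed per-request overhead (system prompt and tool definitions) is paid again on every API call. The function below is a sketch with a hypothetical name, and any numbers passed to it are placeholders rather than measurements of Cursor or 16x Prompt.

```python
def estimate_input_tokens(overhead_tokens: int, context_tokens: int, calls: int) -> int:
    """Estimate total input tokens when every call resends overhead and context.

    overhead_tokens: system prompt plus tool definitions, per call (assumed).
    context_tokens: source code and task description, per call (assumed).
    calls: number of API calls needed to finish the task (assumed).
    """
    if calls < 1:
        raise ValueError("calls must be at least 1")
    return (overhead_tokens + context_tokens) * calls
```

Under this model, a task that takes a single call with no added overhead costs only its context tokens, while the same context with extra overhead spread over several calls costs a multiple of that.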

Black-box vs transparent model

Cursor is a black-box tool. You don't have control over the final prompt that is sent to the model.

16x Prompt uses a transparent model, which means you have full control over the final prompt. You can customize or edit the final prompt via a range of options.

16x Prompt vs aider

Command line vs desktop app

aider is a command line tool. It requires you to run commands in the terminal to add source code files as context.

16x Prompt is a desktop app that provides a graphical user interface for composing prompts and managing source code files.

Final output

aider generates code and git commits directly.

16x Prompt generates prompts that you can either copy-paste into ChatGPT or send to the model via API.

Black-box vs transparent model

aider is a black-box tool. You don't have control over the final prompt that is sent to the model.

16x Prompt uses a transparent model, which means you have full control over the final prompt. You can customize or edit the final prompt via a range of options.

Pricing and Cost

aider is free and open-source. However, the cost of using APIs can be high since aider uses long system prompts and multiple API calls for code generation.

16x Prompt is a one-time purchase. You can use your existing ChatGPT or Claude account to access premium models like GPT-4.1 and Claude Sonnet 4. It does not have long system prompts, so the API cost is much lower.

16x Prompt vs Cline / Roo Code

Final output

Cline generates code and edits directly in your code editor.

16x Prompt generates prompts that you can either copy-paste into ChatGPT or send to the model via API.

Black-box vs transparent model

Cline is a black-box tool. You don't have control over the final prompt that is sent to the model via API.

16x Prompt uses a transparent model, which means you have full control over the final prompt. You can customize or edit the final prompt via a range of options.

Pricing and Cost

Cline is free and open-source. However, the cost of using APIs can be high since Cline uses long system prompts and multiple API calls for code generation.

16x Prompt is a one-time purchase. You can use your existing ChatGPT or Claude account to access premium models like GPT-4.1 and Claude Sonnet 4. It does not have long system prompts, so the API cost is much lower.

16x Prompt vs Repo Prompt

For a detailed comparison between 16x Prompt and Repo Prompt, including cross-platform support, user interface, and operational transparency, please visit our dedicated Repo Prompt comparison page.

Table of Comparison

| | 16x Prompt | ChatGPT | Cursor |
|---|---|---|---|
| Type of Product | AI coding workspace | Chatbot | IDE/Code completion tool |
| Pricing | Lifetime License | Monthly Subscription | Monthly Subscription or Pay per API call |
| System Prompt | No system prompt | Complex hidden system prompt | Complex hidden system prompt |
| Access GPT-4.1 | Yes (via ChatGPT Plus or API) | Yes (via ChatGPT Plus) | Yes (Pay for API usage or paid plan) |
| Access Claude Sonnet 4 | Yes (via Claude Pro or API) | No | Yes (Pay for API usage or paid plan) |
| Manage Local Source Code Files | Yes | No | Yes |
| Code Editing | Yes | No | Yes |
| Output Formatting Instructions | Yes | Custom instructions (affect non-coding conversations) | No |
| Use Alternative Models | Yes | No | Yes |
| Final Output | Prompt, chat response, code | Chat response | Code, chat response |