ChatGPT is a powerful AI tool that can assist developers in writing code. However, its context window and token limit can cause it to ignore instructions or forget earlier parts of a conversation. This post explains what these limits are and how developers can work around them.

ChatGPT Context Window

Developers who use ChatGPT for coding assistance often complain that it ignores instructions or forgets earlier parts of the conversation. The cause is ChatGPT's context window and token limit, which restrict how much information it can consider when generating a response.

The context window is the part of the conversation history that ChatGPT considers when generating a response. Messages that fall outside this window (those sent earliest in the conversation) are not considered at all, so the size of the window determines how much of the conversation ChatGPT can draw on.

Note that the context window covers both the input (the conversation history) and the output (the response ChatGPT generates). For example, once 500 tokens of a response have been generated, 500 fewer tokens are available for the conversation history. If the history already fills the window, its oldest 500 tokens are dropped to make room.
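The shared budget can be sketched in a few lines of Python. This is only an illustration of the arithmetic: the function names are hypothetical, and the 4096-token window is the lower estimate discussed later in this post, not a documented value.

```python
def input_budget(context_window: int, reserved_for_output: int) -> int:
    """Tokens left for conversation history once the reply's share is set aside."""
    if reserved_for_output > context_window:
        raise ValueError("output reservation exceeds the context window")
    return context_window - reserved_for_output


def tokens_dropped(history_tokens: int, context_window: int, reserved_for_output: int) -> int:
    """How many of the oldest history tokens no longer fit in the window."""
    return max(0, history_tokens - input_budget(context_window, reserved_for_output))


# Assumed 4096-token window with a 500-token response.
print(input_budget(4096, 500))          # 3596
print(tokens_dropped(4096, 4096, 500))  # 500
```

With a history that already fills the window, a 500-token response pushes out the oldest 500 tokens, which matches the example above.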

An important aspect that developers should understand is that each subsequent follow-up message in the same conversation will send the entire chat history (within the context window) to the LLM. This makes each follow-up message more expensive than the previous one in terms of token usage, as the entire conversation needs to be processed again.

For example, if your first message uses 100 tokens, that call costs just those 100 input tokens. When you send a second message of 50 tokens, the API call costs 150 input tokens because it includes both messages. A third message of 75 tokens costs 225 tokens, as it includes all previous messages within the context window. (These figures count only your own messages; the model's replies are also part of the history and add to each total.)

This cumulative effect continues until you reach the context window limit, at which point older messages start getting truncated. For developers using the API, this means that longer conversations can significantly impact your token usage and costs.
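The cumulative arithmetic from the example above can be reproduced with a running sum. This is a simplified sketch: `per_call_tokens` is a hypothetical helper, and it ignores both the model's replies and any truncation at the window limit.

```python
from itertools import accumulate


def per_call_tokens(message_tokens):
    """Input tokens sent on each call when the full history is resent every time."""
    return list(accumulate(message_tokens))


calls = per_call_tokens([100, 50, 75])
print(calls)       # [100, 150, 225]
print(sum(calls))  # 475 input tokens processed across the three calls
```

Although the three messages total only 225 tokens, the three calls together process 475 input tokens, which is why long conversations cost more than their length suggests.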

ChatGPT Token Limit

The token limit is the maximum number of tokens that fit in the context window. If the conversation history exceeds this limit, ChatGPT truncates the history and considers only the most recent tokens.
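If you manage the history yourself, a similar "keep the most recent" policy might look like the sketch below. How ChatGPT actually truncates is not documented, so dropping whole messages is an assumption here, and `truncate_history` and the word-based counter are hypothetical stand-ins for a real tokenizer.

```python
def truncate_history(messages, token_limit, count_tokens):
    """Keep the newest messages whose combined token count fits within token_limit."""
    kept = []
    used = 0
    # Walk from newest to oldest and stop at the first message that does not fit.
    for message in reversed(messages):
        cost = count_tokens(message)
        if used + cost > token_limit:
            break
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


# Stand-in counter for illustration only: one token per whitespace-separated word.
def count_words(text):
    return len(text.split())


history = ["a b c", "d e", "f g h i"]
print(truncate_history(history, 6, count_words))  # ['d e', 'f g h i']
```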

While there is no official documentation on the exact context window and token limit for ChatGPT, empirical evidence suggests that the token limit for the context window is around 4096 tokens (about 3000 words) to 8192 tokens (about 6000 words).

ChatGPT Output Token Limit

In addition to the context window token limit, ChatGPT also has a token limit for the output response. This limit is the maximum number of tokens that ChatGPT can generate in a single response. If a response reaches this limit, ChatGPT stops generating and returns the truncated response.

A post on the OpenAI forum suggests that the output token limit for ChatGPT is 4096 tokens. This is consistent with our observations and testing.

Number of Tokens for Text

So how much text or code does this translate to?

According to the OpenAI Tokenizer calculator and the OpenAI Pricing FAQ:

A helpful rule of thumb is that one token generally corresponds to about 4 characters of text for common English text. This translates to roughly ¾ of a word (so 100 tokens ~= 75 words).

For English text, 1 token is approximately 4 characters or 0.75 words. As a point of reference, the collected works of Shakespeare are about 900,000 words or 1.2M tokens.

So, 4096 tokens would correspond to approximately 3000 words, and 8192 tokens would correspond to around 6000 words.
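These rules of thumb are easy to turn into a quick estimator. The sketch below applies only the 4-characters and 0.75-words ratios quoted above; the function names are hypothetical, and a real tokenizer will give different counts, especially for code or non-English text.

```python
import math

CHARS_PER_TOKEN = 4      # rule of thumb for common English text
WORDS_PER_TOKEN = 0.75   # about three quarters of a word per token


def estimate_tokens_from_chars(text: str) -> int:
    """Rough token estimate from character count."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def estimate_words(tokens: int) -> int:
    """Rough number of English words that fit in a token budget."""
    return round(tokens * WORDS_PER_TOKEN)


print(estimate_words(4096))  # 3072
print(estimate_words(8192))  # 6144
```

The results of 3072 and 6144 words are where the rounded figures of 3000 and 6000 words come from.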

Number of Tokens for Source Code

Here are sample token counts for source code in different programming languages:

React:

- React JSX (100 lines): 700 tokens

- React JSX (200 lines): 1,500 tokens

SQL:

- SQL script (100 lines): 1,150 tokens

- SQL script (200 lines): 2,500 tokens

Python:

- Python source code file (100 lines): 1,000 tokens

- Python source code file (200 lines): 1,700 tokens

So if you are working with several large source code files with thousands of lines, you might exceed the token limit of ChatGPT's context window.
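To get a feel for when that happens, you can extrapolate from the sample counts above. The per-line rates below are derived from the 100-line samples only and are a rough assumption; real files vary widely, and `TOKENS_PER_LINE`, `estimate_file_tokens`, and the 8192-token window are illustrative choices, not measured or documented values.

```python
# Tokens per line, derived from the 100-line samples above (rough assumption).
TOKENS_PER_LINE = {"jsx": 7.0, "sql": 11.5, "python": 10.0}


def estimate_file_tokens(language: str, line_count: int) -> int:
    """Rough token estimate for a source file of the given length."""
    return round(TOKENS_PER_LINE[language] * line_count)


def fits_in_window(files, context_window: int) -> bool:
    """files is a list of (language, line_count) pairs."""
    total = sum(estimate_file_tokens(lang, lines) for lang, lines in files)
    return total <= context_window


print(estimate_file_tokens("python", 1000))                   # 10000
print(fits_in_window([("python", 1000)], 8192))               # False
print(fits_in_window([("jsx", 200), ("sql", 100)], 8192))     # True
```

By this estimate, a single 1,000-line Python file already exceeds an 8192-token window.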

Working Around the Limitations

To work around the context window and token limit, you can take the following approaches:

- Break Down Inputs: Instead of feeding the entire source code file at once, break it down into smaller chunks and feed them sequentially to ChatGPT. This way, you can ensure that the context window and token limit are not exceeded.

- Use Relevant Context: Focus on providing the most relevant context to ChatGPT. Instead of feeding the entire conversation history, provide only the most recent and relevant information to generate accurate responses.

- Optimize Code: If you are working with large source code files, consider optimizing the code to reduce the number of tokens. Remove unnecessary comments, whitespace, and redundant code to make the input more concise.
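As an illustration of the first approach, here is a minimal sketch that splits a source file into chunks that each stay under a token budget. It assumes the 4-characters-per-token rule of thumb rather than a real tokenizer, splits only on line boundaries, and uses hypothetical helper names; a line that is longer than the budget ends up in a chunk of its own.

```python
import math


def estimate_tokens(text: str) -> int:
    """Rough estimate: about 4 characters per token (an assumption, not a tokenizer)."""
    return math.ceil(len(text) / 4)


def chunk_lines(source: str, max_tokens: int) -> list[str]:
    """Split source into line-aligned chunks of roughly max_tokens each."""
    chunks = []
    current = []
    current_tokens = 0
    for line in source.splitlines(keepends=True):
        line_tokens = estimate_tokens(line)
        # Start a new chunk when adding this line would exceed the budget.
        if current and current_tokens + line_tokens > max_tokens:
            chunks.append("".join(current))
            current, current_tokens = [], 0
        current.append(line)
        current_tokens += line_tokens
    if current:
        chunks.append("".join(current))
    return chunks


source = "x = 1234\n" * 4  # four lines, about 3 estimated tokens each
chunks = chunk_lines(source, max_tokens=6)
print(len(chunks))                # 2
print("".join(chunks) == source)  # True
```

Splitting on line boundaries keeps each chunk readable, but it can still cut a function in half, so in practice you may prefer to split at function or class boundaries.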

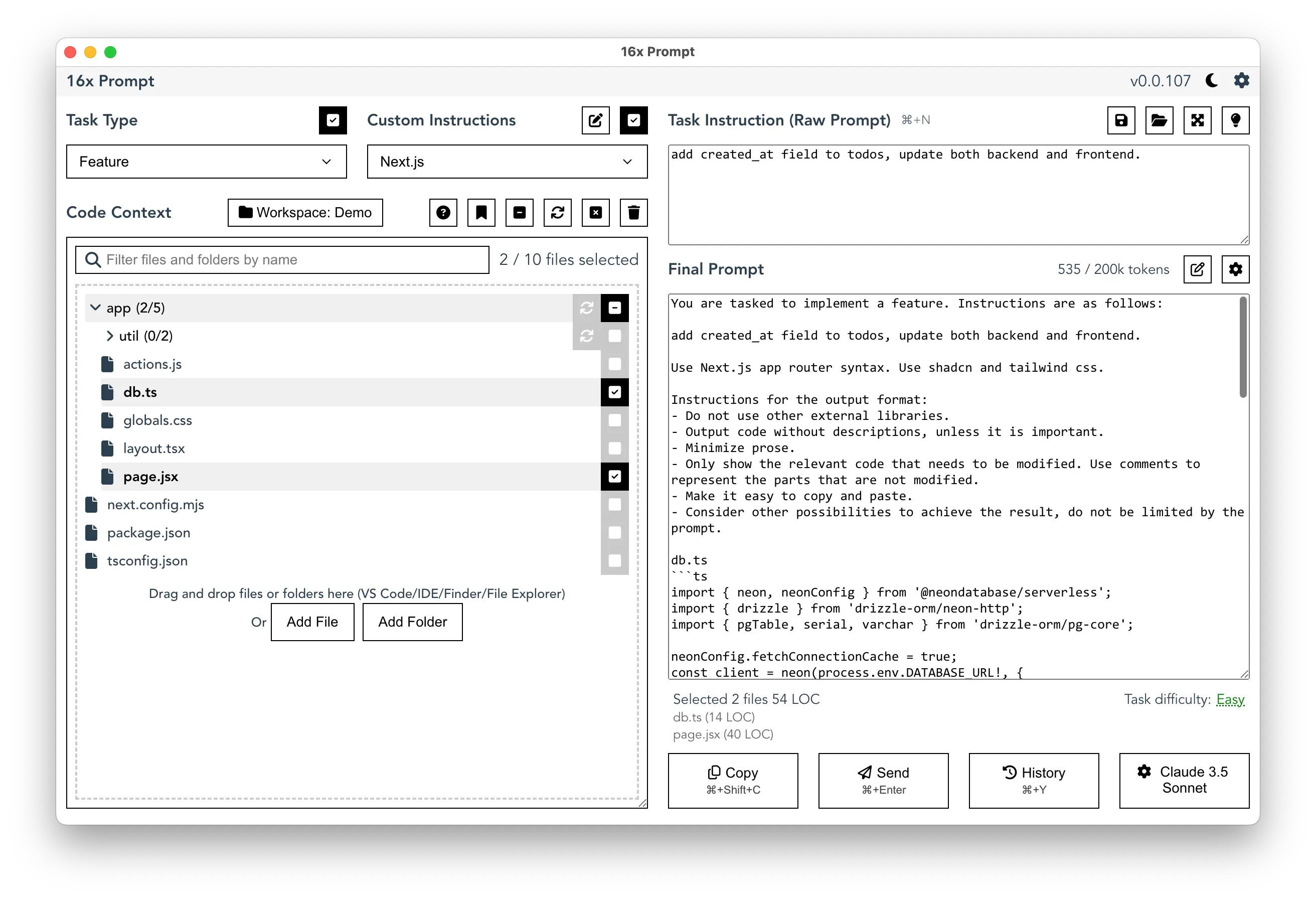

Using a workflow automation tool like 16x Prompt can help streamline the process of breaking down inputs and managing the context window and token limit effectively:

- Code Context Management: You can select which source code files to include in the context window and manage the token limit effectively.

- Token Limit Monitoring: Keep track of the token count to ensure that you do not exceed the limit.

- Code Optimization: The tool can help you optimize the code by removing unnecessary comments, whitespace, and redundant code to reduce the token count.

- Code Refactoring: You can leverage the tool to perform refactoring tasks to break down large code files into smaller, more manageable chunks.