As artificial intelligence becomes essential to software development, enterprises face a complex landscape of potential benefits balanced by significant risks. Key concerns include intellectual property (IP) protection, data privacy, and regulatory compliance.

This post looks at how businesses can deal with these challenges effectively when integrating AI coding tools.

Enhancing Privacy and Compliance

Privacy and compliance are critical for enterprises subject to strict regulations such as GDPR, HIPAA, and CCPA. Adopting AI tools requires more than adherence to these standards; it also requires ongoing work to safeguard the sensitive information those tools may touch.

Data classification is essential for privacy and compliance

Effective strategies should include detailed data governance frameworks. These should clearly outline protocols for handling data and managing breaches. For example, a financial services company might put in place a framework that includes data classification, access control based on roles, and regular audits to ensure compliance with financial regulations.
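As a rough illustration of how data classification, role-based access control, and an audit trail can fit together, here is a minimal Python sketch. The classification levels, role names, and the `AccessPolicy` class are all hypothetical and chosen only for this example; a real framework would follow the organization's own policy and the regulations it is subject to.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import IntEnum


class Sensitivity(IntEnum):
    """Hypothetical classification levels, ordered from least to most sensitive."""
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3


# Hypothetical mapping: the highest level each role may share with an AI tool.
ROLE_CLEARANCE = {
    "contractor": Sensitivity.PUBLIC,
    "developer": Sensitivity.INTERNAL,
    "security_engineer": Sensitivity.CONFIDENTIAL,
}


@dataclass
class AccessPolicy:
    """Checks access by role and records every decision for later audits."""
    audit_log: list = field(default_factory=list)

    def may_share(self, role: str, level: Sensitivity) -> bool:
        clearance = ROLE_CLEARANCE.get(role)  # unknown roles get no access
        allowed = clearance is not None and level <= clearance
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "role": role,
            "level": level.name,
            "allowed": allowed,
        })
        return allowed


policy = AccessPolicy()
policy.may_share("developer", Sensitivity.INTERNAL)      # allowed
policy.may_share("developer", Sensitivity.CONFIDENTIAL)  # denied
```

Every decision, allowed or denied, lands in `audit_log`, which is the kind of record a regular compliance audit could review.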

Putting in place robust privacy policies and conducting regular compliance audits are critical to maintaining trust and legal compliance.

To strengthen privacy and compliance further, enterprises should anonymize user data. Anonymization techniques protect that data by ensuring it cannot be traced back to an individual without authorization.
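One way to approximate this is keyed pseudonymization: identifiers are replaced with tokens derived from a secret key, so only someone holding the key can reproduce the mapping. The sketch below uses Python's standard `hmac` module. The function names, the `user_` token prefix, and the simplified email pattern are assumptions made for illustration, and keyed hashing is strictly pseudonymization rather than full anonymization.

```python
import hashlib
import hmac
import re

# Simplified email pattern for illustration; it does not cover every valid address.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Replace an identifier with a stable token derived from a secret key."""
    digest = hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"user_{digest[:16]}"


def scrub_emails(text: str, secret_key: bytes) -> str:
    """Swap every email address in `text` for its pseudonymous token."""
    return EMAIL_PATTERN.sub(lambda m: pseudonymize(m.group(0), secret_key), text)


# The key would normally come from a secrets manager, not from source code.
key = b"replace-with-a-managed-secret"
print(scrub_emails("Bug reported by alice@example.com", key))
```

Because the same input and key always yield the same token, records can still be joined or counted after scrubbing, while the original address stays out of any prompt or log.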

Data anonymization protects user privacy

Managing IP Risks with Enterprise-Level LLMs

To mitigate intellectual property risks in the use of AI, enterprises are turning to specialized large language models (LLMs) designed for high compliance and security. These models come equipped with robust safeguards to prevent IP infringement and ensure that sensitive data is handled securely. By implementing these enterprise-level LLMs, companies can effectively align AI use with corporate IP policies.

ChatGPT for Enterprise is an example of an AI coding tool that offers IP protection and assurances for businesses.

ChatGPT for Enterprise offers security and privacy

It does not use sensitive data for model training and follows rigorous security standards such as SOC 2. This keeps IP within organizational boundaries and gives businesses the confidence to use AI technologies while protecting their intellectual assets.

By using enterprise-level LLMs such as ChatGPT for Enterprise, businesses can manage potential IP risks associated with AI deployment more effectively. These solutions not only align with internal corporate IP policies but also provide the necessary assurances that intellectual property remains protected within a secure and compliant framework.

Alternatively, companies can use API-based services that offer similar IP protection and compliance guarantees. One example is GPT-4 via OpenAI's API, which is CCPA, GDPR, SOC 2, and SOC 3 compliant.

OpenAI's API offers security and compliance guarantees

Avoiding IP Leakage with Local Deployment

Another effective strategy is local deployment. By hosting AI models on their own servers, companies maintain control over the technology and prevent sensitive data from being exposed to external threats. This approach not only secures the data but also makes the AI tools more responsive to the specific needs of the business.

Tools such as Tabby offer an open-source, self-hosted AI coding assistant that enables companies to keep their AI operations entirely in-house. By deploying Tabby locally, businesses can avoid the risk of IP leakage to external parties and ensure that their own data remains secure within their own infrastructure.

The downside of local deployment is that locally hosted models such as StarCoder and DeepSeek Coder are not as powerful as cloud-based models like ChatGPT for Enterprise. Companies therefore need to weigh the trade-off between security and model performance when choosing between local and cloud-based deployment.
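Some teams may prefer not to choose one option for everything and instead route each request by sensitivity. The following Python sketch shows one possible policy under simple assumptions: the `Backend` enum, the marker keywords, and the `repo_is_restricted` flag are hypothetical, and a keyword check like this is only a stand-in for a real classification step.

```python
from enum import Enum


class Backend(Enum):
    LOCAL = "local"  # self-hosted model: lower capability, data stays in-house
    CLOUD = "cloud"  # hosted model: higher capability, data leaves the network


# Hypothetical markers suggesting a prompt contains sensitive material.
SENSITIVE_MARKERS = ("proprietary", "confidential", "secret")


def choose_backend(prompt: str, repo_is_restricted: bool) -> Backend:
    """Send sensitive work to the local model and everything else to the cloud."""
    lowered = prompt.lower()
    if repo_is_restricted or any(marker in lowered for marker in SENSITIVE_MARKERS):
        return Backend.LOCAL
    return Backend.CLOUD


choose_backend("Refactor this public utility function", repo_is_restricted=False)
choose_backend("Explain our CONFIDENTIAL pricing module", repo_is_restricted=False)
```

The first call falls through to the cloud backend, while the second is kept local because the prompt contains one of the markers.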

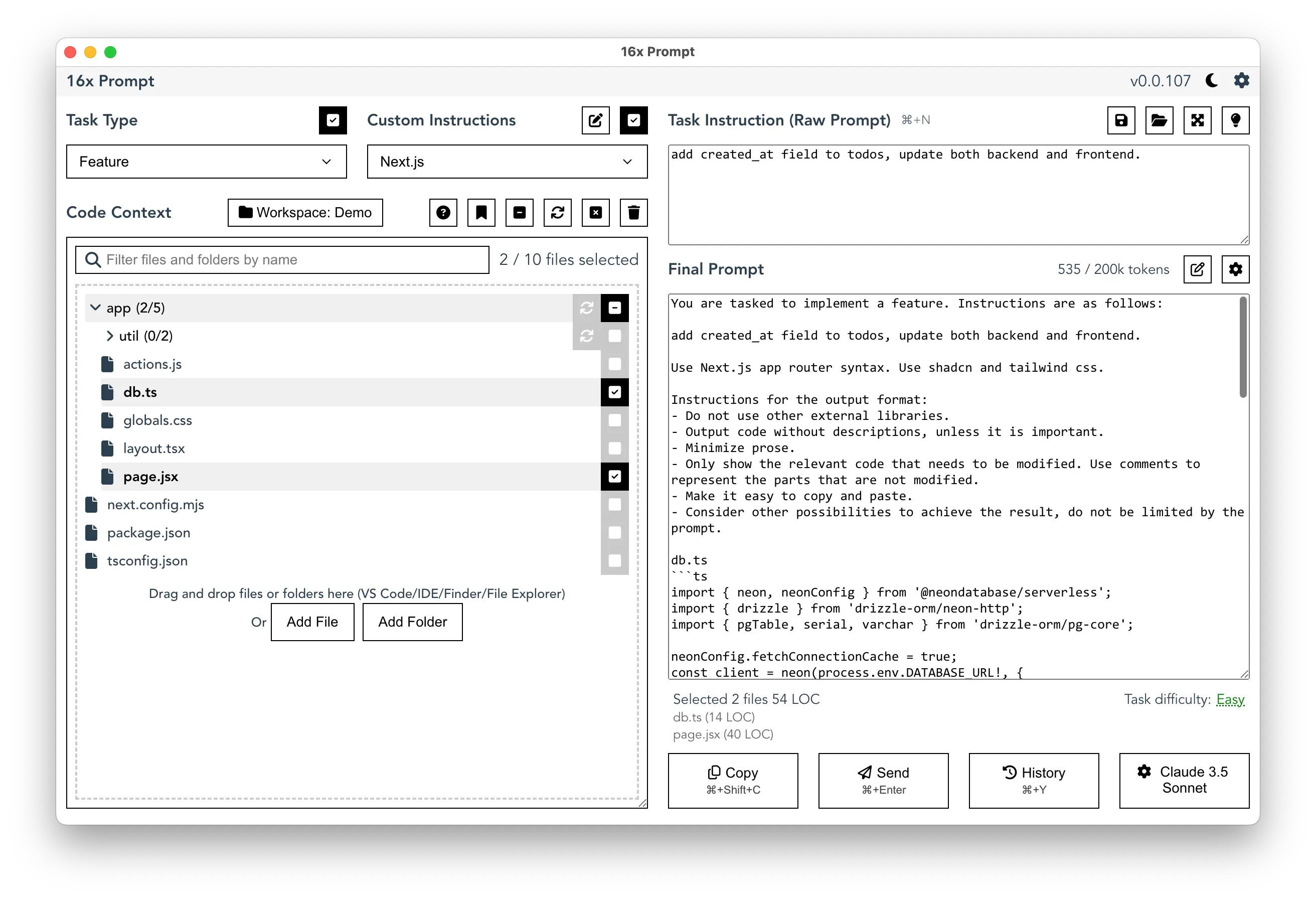

Instead of deploying models locally, companies can pair an offline desktop application such as 16x Prompt with a cloud-based LLM such as ChatGPT for Enterprise. This fits into existing workflows and makes creating coding prompts more efficient.

16x Prompt is a desktop application for creating coding prompts

Managing the Risk of IP Infringement

One of the pivotal concerns for enterprises using AI tools like LLMs is the risk of inadvertently infringing on intellectual property (IP). This concern stems from the uncertainty around whether an LLM's training data might include copyrighted content, potentially leading to legal challenges for businesses deploying these technologies.

To directly address these concerns, Microsoft has introduced two significant commitments:

Copilot Copyright Commitment

Microsoft's Copilot Copyright Commitment assures customers that they can use Microsoft's Copilot services and the outputs they generate without worrying about copyright claims. The company provides a straightforward guarantee: if customers face copyright challenges, Microsoft will assume responsibility for the potential legal risks involved. This commitment allows businesses to use AI with more confidence, knowing that their legal exposure is minimized.

Azure OpenAI Service Copyright Protections

Microsoft has also expanded its copyright protection policy to commercial customers of the Azure OpenAI Service. This service, which adds governance layers on top of OpenAI models, now comes with a promise from Microsoft to defend customers and compensate them for any adverse judgments arising from copyright infringement lawsuits linked to the use of the service or its outputs.

These initiatives form part of Microsoft's broader strategy to provide enterprise customers with secure and legally compliant frameworks for using generative AI technologies. By leveraging these enhanced protections, businesses can integrate AI solutions into their operations more confidently, knowing that they are safeguarded against significant IP-related legal exposure.

OpenAI Indemnification Policy

OpenAI also has a similar indemnification policy for its API customers, offering protection against IP infringement claims arising from the use of its models:

10.1 By Us. We agree to defend and indemnify you for any damages finally awarded by a court of competent jurisdiction and any settlement amounts payable to a third party arising out of a third party claim alleging that the Services (including training data we use to train a model that powers the Services) infringe any third party intellectual property right.

Educating Employees on IP Risks

Additionally, it is crucial for companies to put in place strict policies and provide thorough training to employees regarding the use of AI tools. This training should emphasize the risks associated with using unauthorized AI technologies, which might expose corporate data to potential breaches.

Putting these measures in place not only helps safeguard the company's intellectual property but also ensures that staff are aware of and comply with security protocols.
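Policies of this kind can be backed by a lightweight technical check. As a minimal sketch, the Python function below tests whether a request targets an approved AI service. The allowlist contents are purely illustrative (the internal hostname is made up), and in practice such a rule would more likely live in a proxy or gateway than in application code.

```python
from urllib.parse import urlparse

# Illustrative allowlist; each organization would maintain its own approved hosts.
APPROVED_AI_HOSTS = {
    "api.openai.com",
    "tabby.internal.example.com",  # hypothetical self-hosted assistant
}


def is_approved_endpoint(url: str) -> bool:
    """Return True only if the URL's host is on the approved list."""
    host = urlparse(url).hostname  # None when the URL has no network location
    return host is not None and host in APPROVED_AI_HOSTS


is_approved_endpoint("https://api.openai.com/v1/chat/completions")  # approved
is_approved_endpoint("https://unknown-ai.example.net/v1/complete")  # not approved
```

A check like this does not replace training, but it gives employees immediate feedback when they reach for a tool the company has not vetted.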

Conclusion: Implementing AI with an Eye on Security

For enterprises, adopting AI coding tools requires a balanced approach that focuses on security, compliance, and IP protection. By selecting the right tools and implementing strict governance frameworks, companies can realize the benefits of AI while minimizing risks to their operations and reputations.

Businesses are encouraged to evaluate their specific needs and look for AI solutions that offer the necessary assurances for privacy, compliance, and IP protection, ensuring a secure and compliant integration into their software development processes.